Metadata streams

Axis devices have three different metadata streams: the PTZ stream, the event stream, and the analytics stream. You can read more about each stream on this page.

- To view the streams, you can use the AXIS Metadata Monitor tool.

- More information about metadata streams is also available in the AXIS OS portal.

PTZ metadata stream

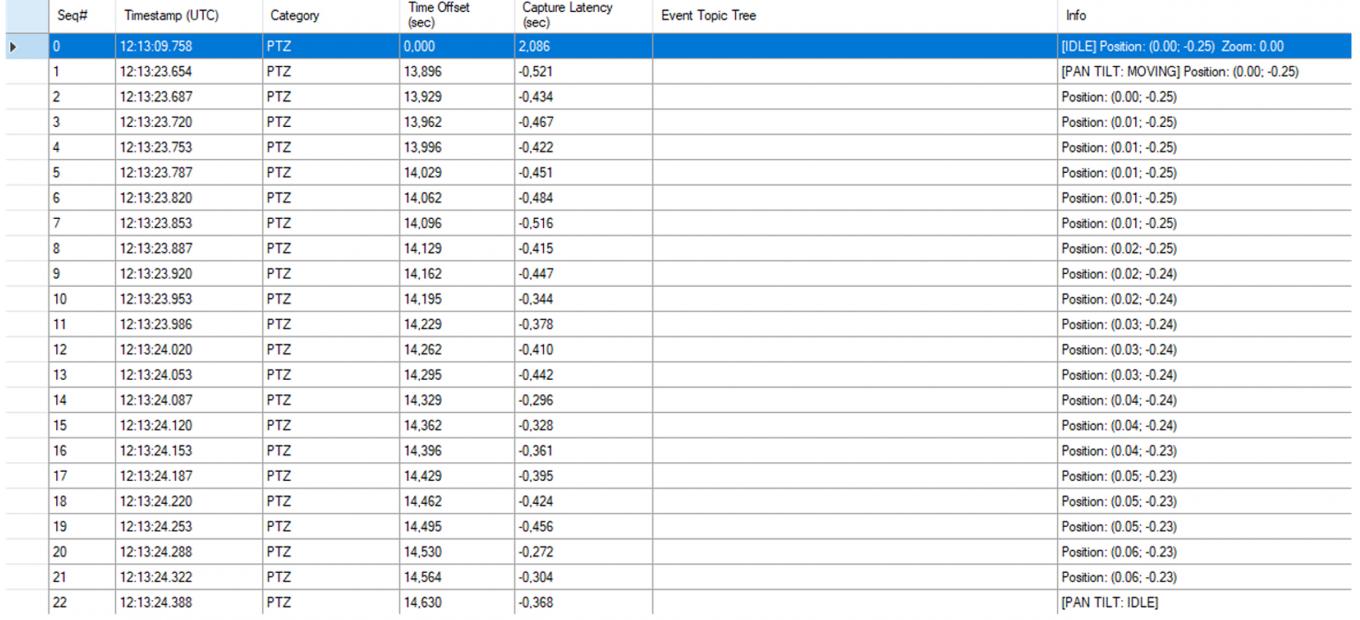

The PTZ stream delivers data corresponding to actual PTZ movements. To receive it, append "ptz=all" to the RTSP URL. The stream only delivers data while there is movement; otherwise it is silent.

PTZ move action

Sample flow of PTZ move action:

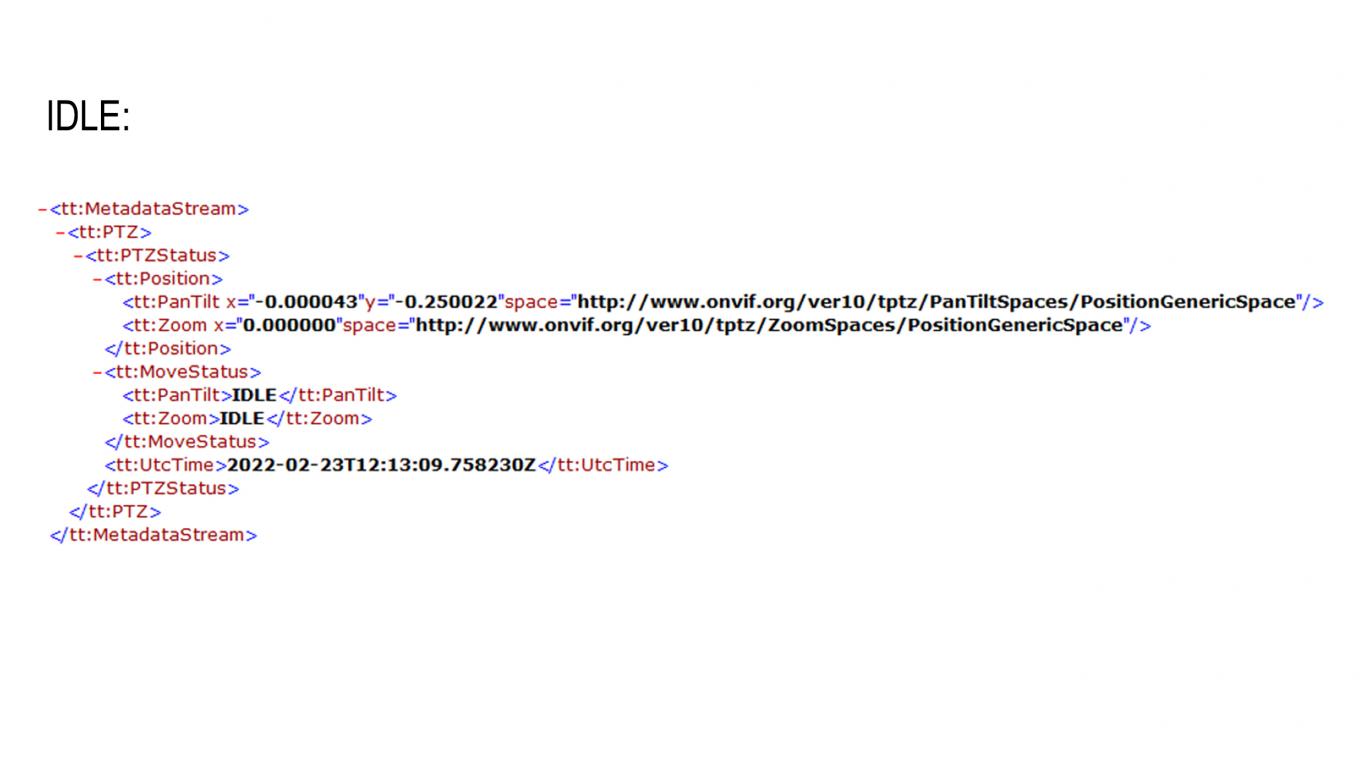

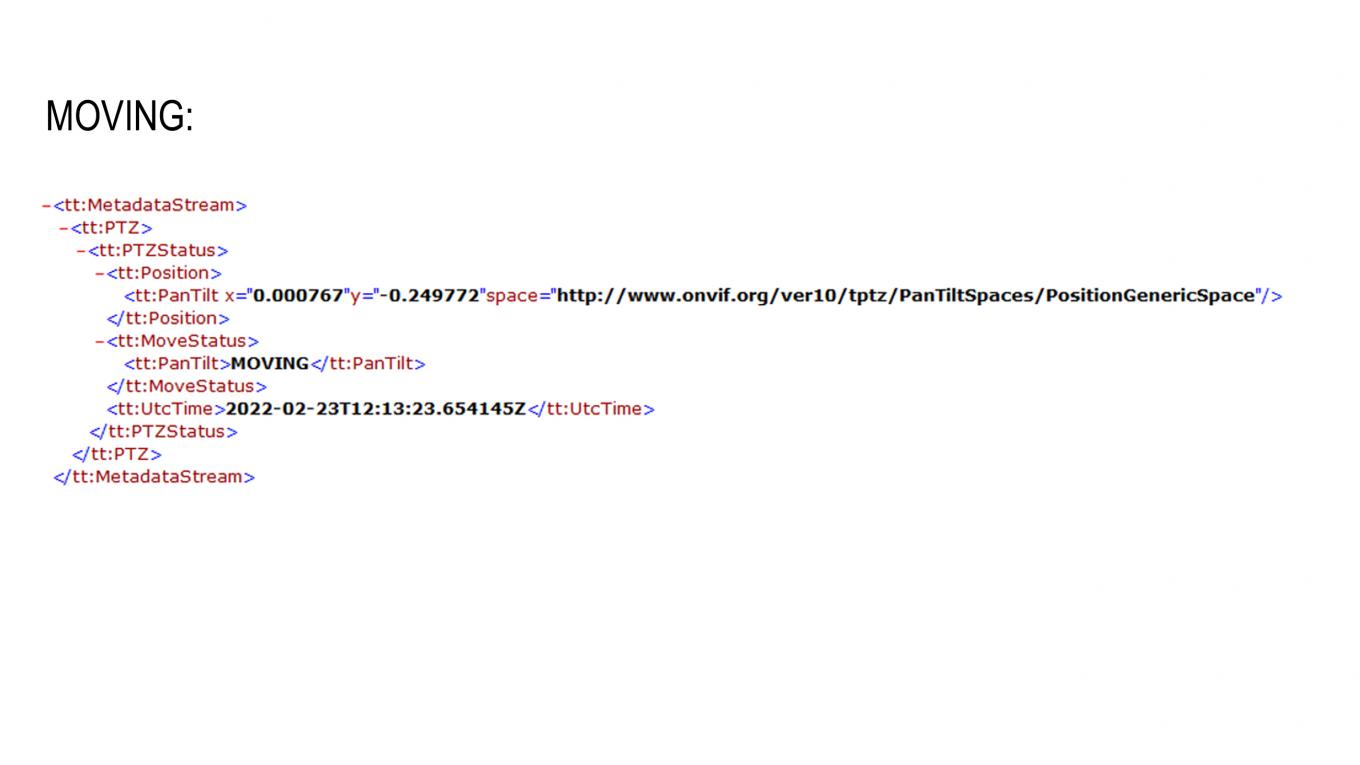

Sample packages

Here are some sample packages showing idle, moving, and position:

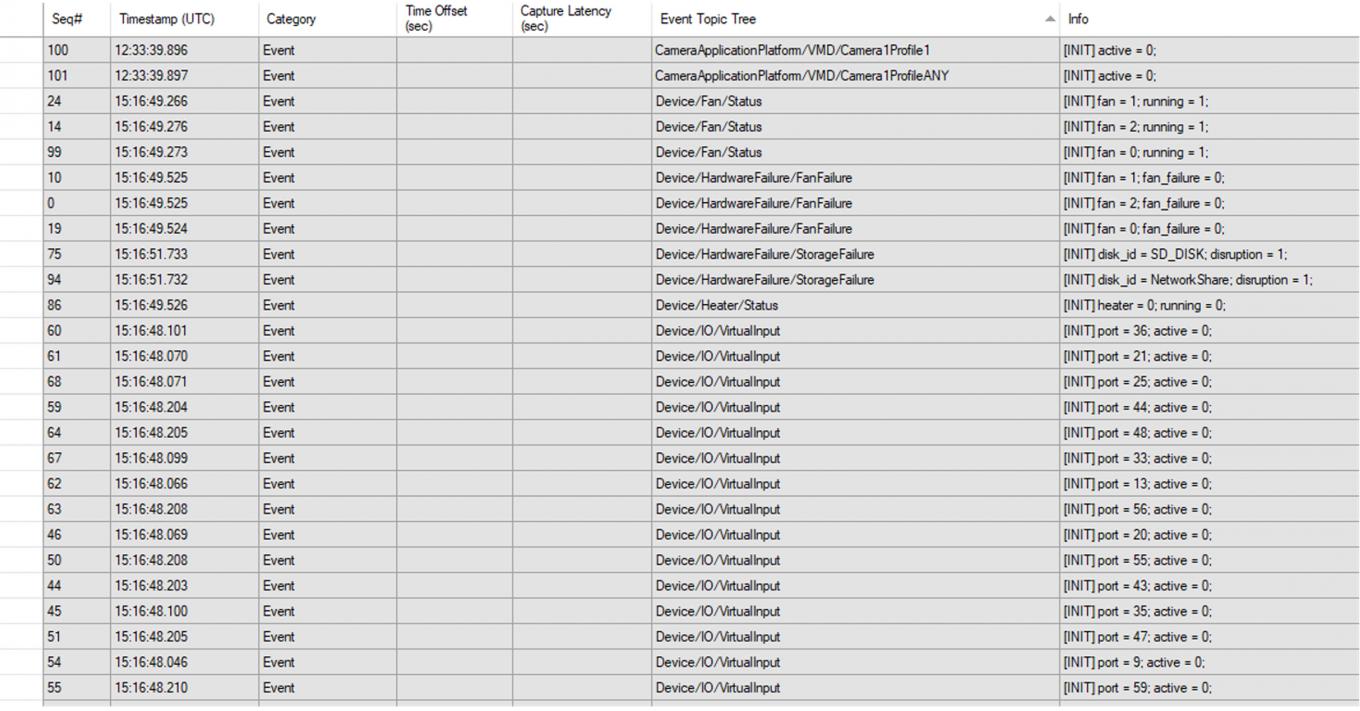

Event stream

The event stream shows all events coming from a device. These can be I/O events, temperature events based on the operating conditions of the device, the status of PTZ presets, the status of the network or storage connection, ACAP events, and so on. To receive it, append "event=on" to the RTSP URL. The event stream is a global stream shared among the device channels: on a multisensor unit, or on a device with several view areas, the event stream is identical on all channels, since I/O and other properties are shared. Like the PTZ stream, it only delivers data when something occurs; otherwise it is silent. It is not meant for sharing data but for conveying trigger information, which also makes it a good fit for live alerts.

Output

Sample:

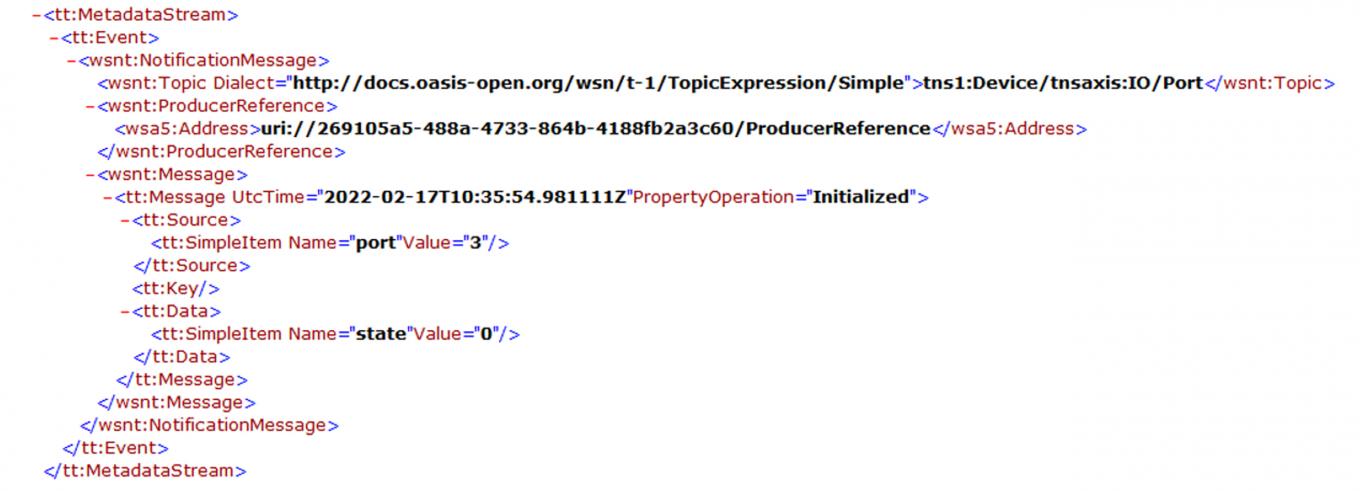

I/O

Sample package:

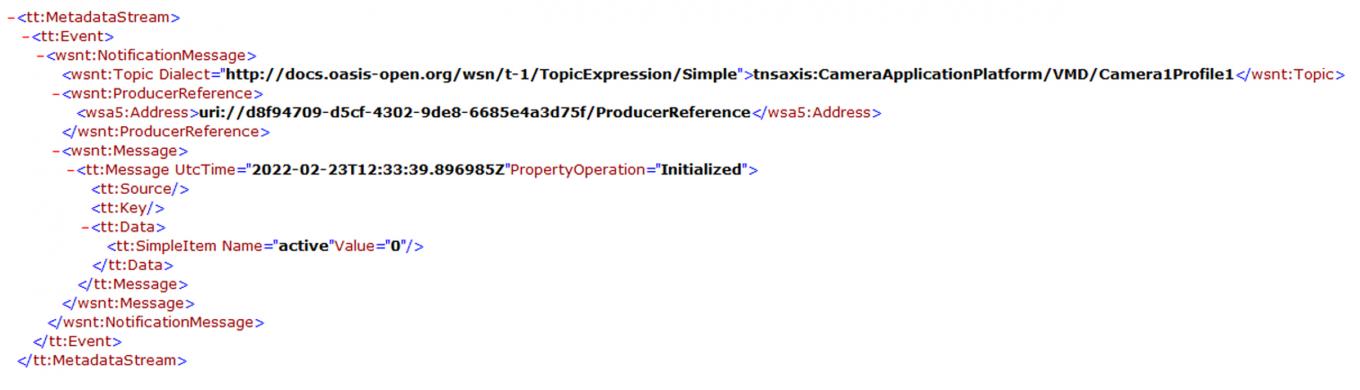

VMD4

Sample package:

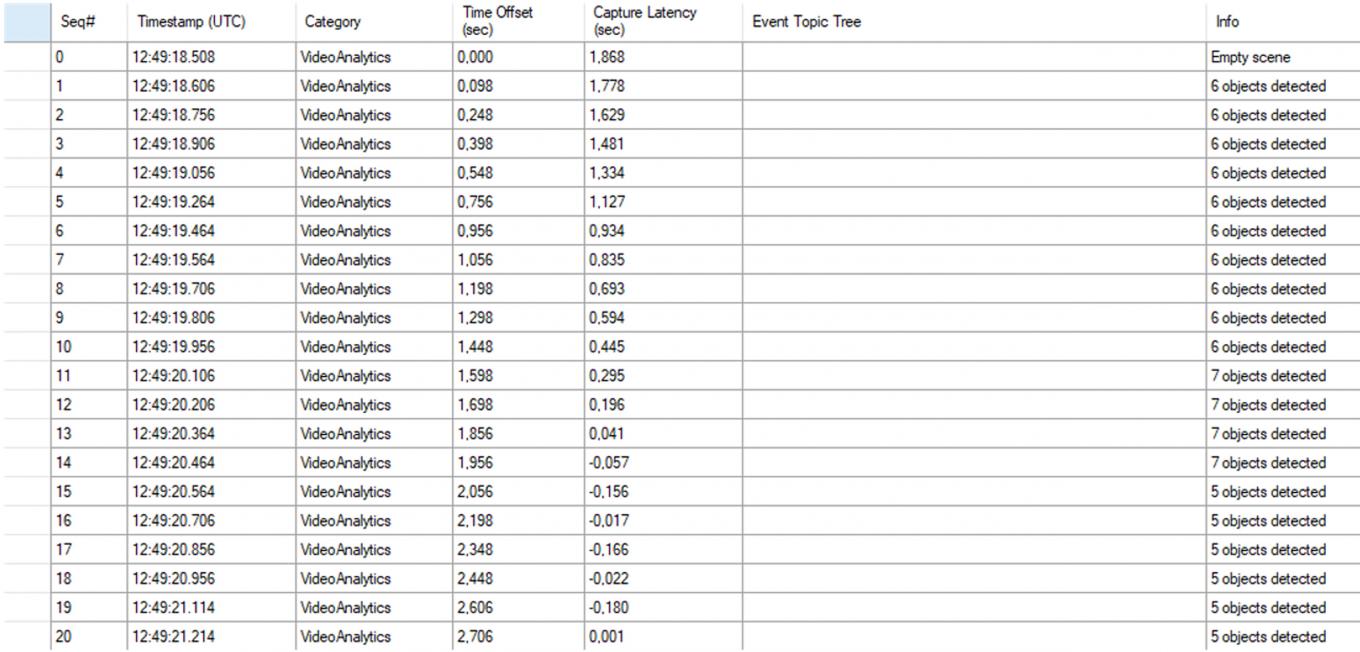

Analytics stream

The analytics stream is different from the other metadata streams. It is a continuous stream, meaning that it constantly delivers packages even when there is no activity. It is designed for sharing data and is well suited for post-event actions.

To support use cases where analytics metadata is a requirement, for example forensic search, Axis has made analytics metadata available in later firmware releases. Analytics metadata was first introduced in AXIS OS 9.50 (the AXIS OS 9.80 LTS release has the same version as 9.50), and object classification metadata was added in AXIS OS 10.6.

Output

Sample:

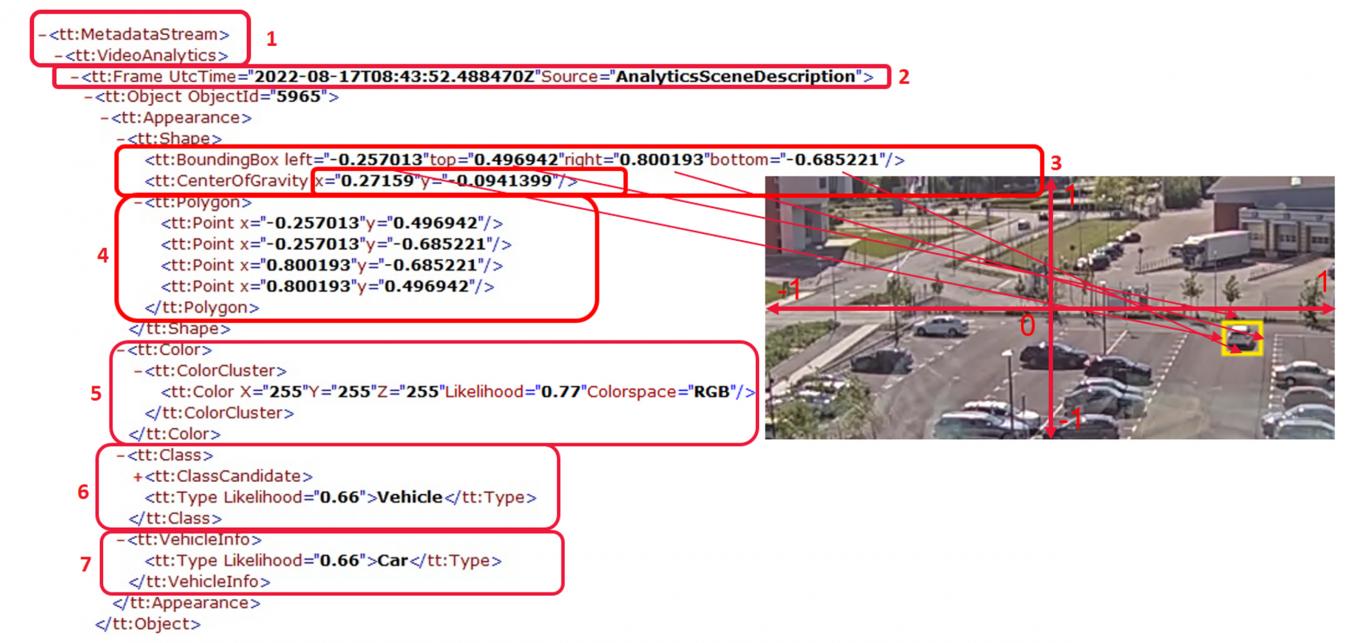

Axis devices produce analytics metadata based on the ONVIF Profile M format.

The numbered items below explain what the sample contains. (The example reflects AXIS OS 11.2 on an ARTPEC-8 camera.)

1. Like the other streams, it has its own branch called "VideoAnalytics"

2. It has timestamps like any other stream. The timestamps are crucial for synchronizing with video (or audio) when you play back or query. Unlike the other streams, it also has a source field. The source field name changed from "objectsanalytics" to "AnalyticsSceneDescription" in AXIS OS 11.0.

3. Information for bounding boxes and polygons, represented in the ONVIF coordinate system, which ranges from -1 to 1 on both the X and Y axes.

4. Bounding boxes and polygons are currently the same if you use Analytics Scene Description as a source.

5. The color (of the car in this example) and a probability value that indicates how confident the device is in the color. The color is presented before the object class due to the ONVIF Profile M format. The object class is described in item 6.

6. The object class (Human or Vehicle) and a probability value that indicates how confident the device is in the class, such as Vehicle in this example.

7. In addition to the main category, a sub-category is presented, such as a car in this example.
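As a rough illustration of item 3, the sketch below maps a point from the normalized -1 to 1 range to pixel positions. It assumes that x runs from -1 (left) to 1 (right), that y runs from -1 (bottom) to 1 (top), and that pixel coordinates start in the top-left corner of the image. The function name `to_pixels` and the 1920x1080 frame size are made up for this example.

```python
def to_pixels(x, y, width, height):
    """Map a normalized point (-1..1 on both axes) to pixel coordinates.

    Assumption: y = 1 is the top edge of the image, while pixel rows grow
    downward from the top-left corner, so the y axis is flipped.
    """
    px = (x + 1.0) / 2.0 * width
    py = (1.0 - y) / 2.0 * height
    return px, py


# The centre of the normalized range lands in the middle of the frame.
center = to_pixels(0.0, 0.0, 1920, 1080)
```

If your video pipeline scales or crops the image, the width and height passed in should be those of the frame you actually draw on.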

Motion Object Tracking Engine as source

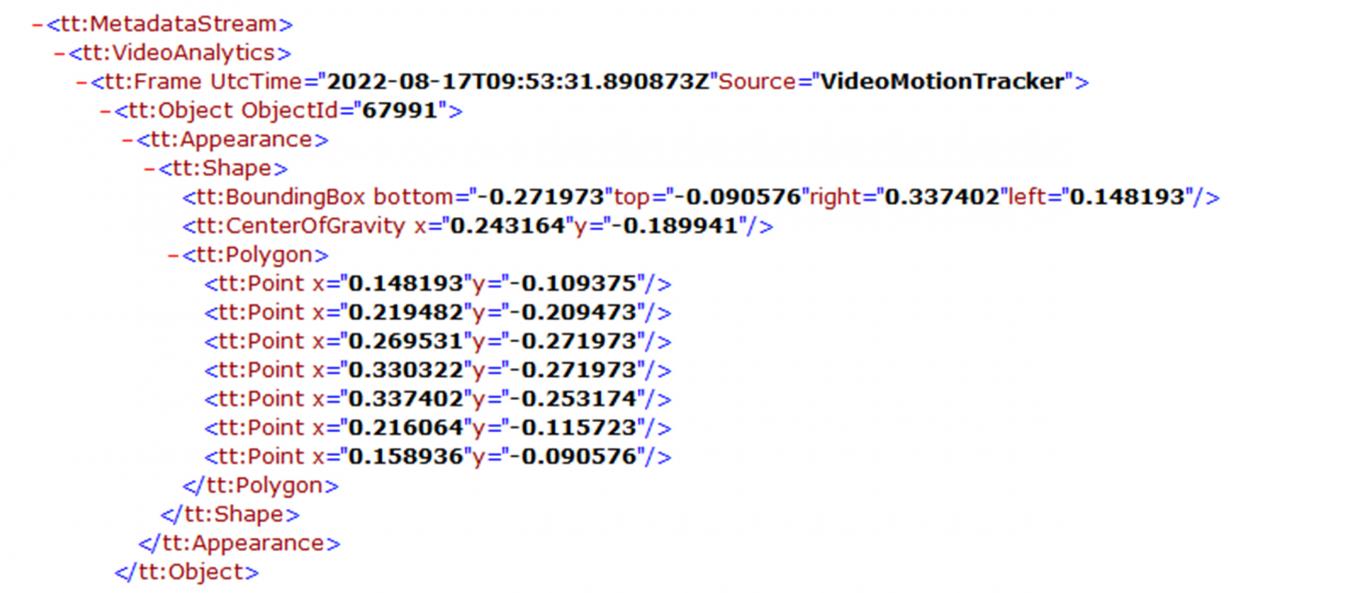

Below is a sample package that has "Axis Video Motion Tracker" as a source. It has real polygons consisting of many points. Since AXIS OS 10.9 it also has a source value.

No activity in scene

Here is a sample when there's no activity in the scene:

Type of metadata on your device

The type of metadata also depends on the device. For example, specific camera models are required to receive object classification metadata.

- As Axis introduces new devices with more processing power and deep learning capabilities, most of the portfolio will produce object classification metadata.

- Analytics metadata created by radar devices and radar-video fusion cameras is explained in the respective integration guidelines.

| AXIS OS version | Change |
| --- | --- |
| AXIS OS 11.5 | Upper and lower clothing color has been added as an attribute of the human object class in the analytics scene description metadata stream. |
| AXIS OS 11.1 | Vehicle color is included in the Axis analytics metadata stream. |
| AXIS OS 11.0 | The source parameter in the Axis analytics metadata stream changed to "AnalyticsSceneDescription". |
| AXIS OS 10.11 | For new products, and after a device has been reset to factory defaults, AXIS Object Analytics becomes the default metadata producer. Previously configured systems continue to work as before after an upgrade to AXIS OS 10.11. |
| AXIS OS 10.10 | Restructure of the AXIS Object Analytics and Motion Object Tracking Engine (MOTE) metadata producers. The AXIS Object Analytics ACAP is no longer the producer of object classification metadata. The metadata from the AXIS Object Analytics provider adds two new classifications with associated bounding boxes. Note: The additional classifications are only available on DL cameras. For more information, see changes in Metadata Analytics stream AXIS OS 10.10. |
| AXIS OS 10.9 | Motion Object Tracking Engine (MOTE) data got a source name. The main difference is Source=VideoMotionTracker; the rest is the same. See example package. |
| AXIS OS 10.6 | With the release of AXIS OS 10.6, you can retrieve object classification data, for example humans and vehicles. To retrieve object classification data, you need a device that supports AXIS Object Analytics. AXIS Object Analytics requires ARTPEC-7 or higher and a certain amount of memory. |

What about cameras that don't support Axis Object Analytics?

Such devices will still produce metadata but without object classification. This means that you will get Object ID, bounding boxes, center of gravity, and polygons. Axis refers to this data as MOTE (Motion Object Tracking Engine) data.

From AXIS OS 10.6, the device handles the rotation of the metadata. Before 10.6, the client needs to rotate the metadata stream according to the camera rotation. For a more accurate conversion, you can use the “transform matrix” in the SDP.

What are the benefits of introducing analytics metadata?

We made these changes to achieve ONVIF Profile M conformance and to help support use cases related to forensic search.

The primary use case for the current analytics metadata is forensic search. Depending on the filters you apply, you can use the data for other purposes too. Metadata rotation is another improvement that simplifies integration. The data is also supported not only by high-end models but by many other camera models as well.

What should developers take into consideration?

The analytics metadata stream is delivered via the RTSP stream when a special parameter is set. You need to append “analytics=polygon” to your RTSP request.

For example:

- No video or audio, only the analytics metadata stream

rtsp:///axis-media/media.amp?video=0&audio=0&analytics=polygon

- The available video and audio on channel camera=1, plus the analytics metadata stream

rtsp:///axis-media/media.amp?camera=1&analytics=polygon

- Video, audio and all metadata streams

rtsp:///axis-media/media.amp?video=1&audio=1&analytics=polygon&event=on&ptz=all
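The request URLs above can also be assembled in code. The following is a minimal sketch, assuming you build the URL yourself before handing it to an RTSP client. The helper name `build_rtsp_url` and the address 192.0.2.10 are placeholders for this example; only the path and the query parameters come from the examples above.

```python
from urllib.parse import urlencode


def build_rtsp_url(host, **params):
    """Build an RTSP URL for /axis-media/media.amp with the given query parameters."""
    query = urlencode(params)
    url = f"rtsp://{host}/axis-media/media.amp"
    return f"{url}?{query}" if query else url


# 192.0.2.10 is a placeholder address, not a real device.
metadata_only = build_rtsp_url("192.0.2.10", video=0, audio=0, analytics="polygon")
everything = build_rtsp_url(
    "192.0.2.10", video=1, audio=1, analytics="polygon", event="on", ptz="all"
)
```

Keyword arguments keep their order, so the query string lists the parameters in the order you pass them.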

Time synchronization is vital in this implementation, so the same NTP server should be used in the camera, clients, and the VMS server.

When implementing forensic search, pay attention to “Source” and “Object ID” in the XML document. An Object ID may disappear and reappear depending on the scene. In AXIS OS 10.6, MOTE data did not have a "Source" field; in 10.9 and later, it carries Source=VideoMotionTracker. AXIS Object Analytics data has Source=objectanalytics. Note that not every frame contains object classification when AXIS Object Analytics is activated; it depends on the scene. When merging query results, rely on the Object ID rather than the object classification, which is less reliable. The Object ID may change as well, but you can follow it via the Object tree specification.
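One possible way to merge results by Object ID is sketched below. It assumes the frames have already been parsed into one dictionary per object per frame; the keys `object_id`, `class` and `likelihood` and the function name `best_class_per_object` are hypothetical and chosen for this example only. For each Object ID it keeps the most confident classification seen in any frame, so frames without a classification do not discard earlier information.

```python
def best_class_per_object(observations):
    """Return {object_id: (class_name, likelihood)} with the most confident
    classification seen for each object across all frames.

    Objects that were never classified map to None.
    """
    best = {}
    for obs in observations:
        object_id = obs["object_id"]
        best.setdefault(object_id, None)
        cls = obs.get("class")
        if cls is None:
            continue  # this frame carried no classification for the object
        candidate = (cls, obs["likelihood"])
        if best[object_id] is None or candidate[1] > best[object_id][1]:
            best[object_id] = candidate
    return best


# Hypothetical observations, one dict per object per frame.
observations = [
    {"object_id": 7, "class": None, "likelihood": None},
    {"object_id": 7, "class": "Vehicle", "likelihood": 0.6},
    {"object_id": 7, "class": "Vehicle", "likelihood": 0.9},
    {"object_id": 8, "class": None, "likelihood": None},
]
result = best_class_per_object(observations)
```

This sketch does not follow Object IDs that change over time; handling that would require the Object tree information mentioned above.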

Another important point is that the analytics metadata stream provides "raw" data that you need to filter when querying. It constantly delivers XML documents even if nothing is going on in the scene. It cannot be used directly like event data from the event stream, which sends packages only when something occurs.
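As an illustration of that filtering step, the sketch below drops frames that contain no objects. The sample XML is hand-written and heavily simplified for this example, not captured from a device. The element and attribute names (`Frame`, `Object`, `UtcTime`, `ObjectId`) are assumed from the ONVIF schema and should be checked against the packages your device actually sends.

```python
import xml.etree.ElementTree as ET

# Namespace prefix mapping for the ONVIF schema (assumed for this example).
NS = {"tt": "http://www.onvif.org/ver10/schema"}

# Hand-written, simplified sample: one empty frame and one frame with an object.
SAMPLE = """\
<tt:MetadataStream xmlns:tt="http://www.onvif.org/ver10/schema">
  <tt:VideoAnalytics>
    <tt:Frame UtcTime="2023-01-01T12:00:00.000Z" Source="AnalyticsSceneDescription"/>
    <tt:Frame UtcTime="2023-01-01T12:00:00.100Z" Source="AnalyticsSceneDescription">
      <tt:Object ObjectId="7"/>
    </tt:Frame>
  </tt:VideoAnalytics>
</tt:MetadataStream>
"""


def frames_with_objects(xml_text):
    """Return (UtcTime, [ObjectId, ...]) for every frame with at least one object."""
    root = ET.fromstring(xml_text)
    result = []
    for frame in root.iterfind(".//tt:Frame", NS):
        ids = [obj.get("ObjectId") for obj in frame.findall("tt:Object", NS)]
        if ids:  # skip frames delivered while nothing happens in the scene
            result.append((frame.get("UtcTime"), ids))
    return result


active = frames_with_objects(SAMPLE)
```

In a real integration you would apply the same check to each package as it arrives, before storing or indexing it.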